Archive for the ‘commercial open source’ Category

Next Big Thing: The Data Explosion

These are the main points from The Data Explosion section of my Next Big Thing In Big Data presentation.

- The data explosion started in the 1960s

- Hard drive sizes double every 2 years

- Data expands to fill the space available

- Most data types have a natural maximum granularity

- We are reaching the natural limit for color, images, sound and dates

- Business data also has a natural limit

- Organizations are jumping from transactional level data to the natural maximum in one implementation

- As an organization implements a big data system, the volume of its stored data “pops”

- Few companies are popping so far

- The explosion of hype exceeds the data explosion

- The server, memory, storage, and processor vendors do not show a data explosion happening

Summary

- The underlying trend is an explosion itself

- The explosion on the explosion is only minor so far

- Popcorn Effect – it will continue to grow

Please comment or ask questions

5 Out Of 6 Developers Are Using Open Source

ZDNet reports on a Forrester survey that finds 5 out of 6 developers are using or deploying open source.

In the survey they found that 7% of developers are using open source business intelligence tools such as Pentaho.

The United States Department of Labor states that, in 2010, there were 913,100 software developers in the USA alone.

http://www.bls.gov/ooh/Computer-and-Information-Technology/Software-developers.htm

7% of 913,100 means about 64,000 developers using open source business intelligence software. Nice.
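The arithmetic behind that estimate can be checked with a quick sketch. The variable names are mine; the two inputs are the figures quoted above.

```python
# Figures quoted in the post (variable names are illustrative)
developers_usa_2010 = 913_100   # US Department of Labor figure for 2010
share_using_oss_bi = 0.07       # 7% from the Forrester survey, as quoted above

estimated_users = developers_usa_2010 * share_using_oss_bi
print(f"Estimated developers using open source BI: {estimated_users:,.0f}")
```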

I Never Owned Any Software To Begin With

My thoughts on the whole Emily White/stealing music topic:

http://www.npr.org/blogs/allsongs/2012/06/16/154863819/i-never-owned-any-music-to-begin-with

http://thetrichordist.wordpress.com/2012/06/18/letter-to-emily-white-at-npr-all-songs-considered/

When she says she only bought 15 albums, I think she is talking about physical CDs. I think she did buy some of her music online. But she clearly states that she ripped music from the radio station and swapped mix CDs with her friends, and she makes it sound like she thinks this is not stealing.

Don’t Blame iTunes

Many people who complain about artists’ income blame Apple and iTunes. Yes, iTunes propagated the old economic splits and percentages into the digital world. But Apple did not create those splits; they were agreed upon in contracts between the labels/producers and the artists. What iTunes did was to provide an alternative digital distribution medium to Napster. Apple saved artists from the prospect of getting no revenue at all. People who attack and boycott iTunes thinking that they are helping artists are deluded.

It’s Not Just Music

This whole debate also extends to movies, books, news commentary, and software – anything that can be digitally copied. In each of these arenas, the players and economic distribution are different, but the consequence of not paying is the same. If we all behaved this way, ultimately, there would be no books or movies either. So how does this relate to proprietary software, open source software, and free software?

Proprietary Software

Just like companies that publish books, music, movies etc., proprietary software companies were the gatekeepers. They decided what software was created and made available. When the hardware and software become available at the consumer level, independent producers spring up. This happened with freeware software for PCs. The internet enables the distribution of the software, and methods of collecting payment. The costs of creating books, music, and movies have dropped dramatically because of the hardware and software now available. But if no-one pays for the content created, the proprietary software companies will go out of business.

Non-Proprietary

Open source and free software are other ways for creating and distributing software, the difference being that these rely on software (source and binaries) being easy to copy. Don’t steal Microsoft’s BI software and use it without permission. Use our open source BI software – we want you to.

Free Software

Free software requires that the software, and all software that is built upon it, be ‘free’. In this case ‘free’ means you can freely modify it, distribute it, and build upon it, and you give others those same rights. You can still charge for the software, but it makes no sense to (given the rights you give to your ‘customer’).

The ideals of the Free Software Foundation (FSF) are based on the notion that when you think of something or invent something, it belongs to the world; you don’t own it. This is a wonderful idea. However, most of the world, including many industries, jobs, and professions, is based on the opposite principle – if you create it, you own it. To my mind I have fewer rights under the FSF view of the world: I don’t have the right to my own ideas.

Because of the freedoms that the Free Software Foundation believes in, they are against Digital Rights Management (DRM) software. DRM tries to protect the rights of artists, producers, and distributors of artistic content. In order to protect these rights, software is needed that is proprietary. If the DRM source code were open, it would make it easy for hackers to decode the content and remove the copy protection. So the Free Software Foundation is taking up the fight against DRM, calling it ‘Digital Restrictions Management’ (http://www.fsf.org/campaigns/drm.html). They call it this because, they say, DRM takes away your right to steal other people’s inventions. If you support DRM-free software, you are choosing to fight against musicians, authors, and actors.

Open Source

The Open Source movement takes a pragmatic approach on this topic. When you have an idea, it is yours. You can choose to do whatever you want with your invention. If it is a software invention, and you choose to put it into open source, that’s great. If you choose not to, that’s fine too, because it is yours. Open Source allows hybrid models – where a producer can decide to put some of their software into open source but not all of it (open core or freemium model). This model enables a software producer to provide something of value to people who would not have paid for anything anyway (this includes geographies and economies where the producer would not sell anyway). These people are willing participants and contributors in other ways. The producer also gets to sell whatever software products it wants.

Doomsday?

For some creative areas, if no-one pays for any content anymore, the creators will disappear eventually, and there will be no more content. But what happens if no-one pays for software anymore?

Proprietary software dies eventually, unless they switch to services models.

The majority of people contributing to open source/free software today are IT developers. There are two main types here: creating/extending/fixing software in the course of getting their project finished, or sponsored contributors. IT is where the majority of software developers are today, so IT/enterprise/business software is safe.

The software that would be at most risk would be software that is created by smaller software companies. Particularly software that has large up-front development costs. Games. The first, and maybe only, software segment to die would be the big-budget, realistic, immersive, loud video games. Who cares most about these games? The same demographic that is stealing all the music.

I say let Generation OMG copy and steal everything they want. All the really cool and fun careers will evaporate. Lots of the stuff they love (movies, music, games) will disappear. After they have spent a decade texting each other about how sucky everything is, they will grow up and have to re-create these industries. Hopefully with better economic structures than the current ones.

Pentaho and DataStax

We announced a strategic partnership with DataStax today: http://www.pentaho.com/press-room/releases/datastax-and-pentaho-jointly-deliver-complete-analytics-solution-for-apache-cassandra/

DataStax provides products and services for the popular Apache No-SQL database Cassandra. We are releasing our first round of Cassandra integration in our next major release and you can download it today (see below).

Our Cassandra integration includes open source data integration steps to read from, and write to Cassandra. So you can integrate Cassandra into your data architecture using Pentaho Data Integration/Kettle and avoid creating a Big Silo – all with a nice drag/drop graphical UI. Since our tools are integrated, you can create desktop and web-based reports directly on top of Cassandra. You can also use our tools to extract and aggregate data into a datamart for interactive exploration and analysis. We are demoing these capabilities at the Strata conference in Santa Clara this week.

Links

- Product downloads, how-to videos and documents are available at http://www.pentaho.com/cassandra and http://www.datastax.com/pentaho

- Attend the webinar on March 15th to learn more about using Cassandra’s integration with Pentaho Kettle: http://www.pentaho.com/datastax-webinar

- Download, access how-to documents and videos at http://community.pentaho.com/BigData

olap4j V1.0 has been released.

Back in the ’90s and early 2000s I was involved in the attempts by the proprietary BI vendors to create common standards. Anyone remember JOLAP? The vendors were doing this only because of increasing demand and frustration from their customers – none of them actually wanted these standards. Why? Because, in the short term, standards would only help the customers and the implementers, not the vendors. These efforts were hugely political, with many of the vendors taking the opportunity to score points against each other. The resulting ‘standards’ were useless, and none of the large vendors were willing, or able, to support them.

How refreshing, then, to have olap4j reach the 1.0 milestone – http://www.olap4j.org. Created by consumers and producers of open source BI software, olap4j shows the advantage of open collaboration by motivated parties. Already olap4j has a Mondrian driver, and an XMLA driver for Microsoft SQL Server Analysis Services, SAP BW, and Jedox Palo. There are also several clients that use olap4j servers, including some from Pentaho, as well as Saiku, Wabit, and ADANS.

olap4j is very cool stuff. You can read more on Julian Hyde’s blog. Congratulations to everyone who has worked on olap4j.

More Hadoop in New York City

Yesterday was fun. First I met with a potential customer looking to try Hadoop for a big data project.

Then I had a lengthy and interesting chat with Dan Woods. Amongst other things Dan runs http://www.citoresearch.com/ and also blogs for Forbes. We talked about Pentaho’s history and our experiences so far with the commercial open source model. We also talked about Hadoop and big data and about the vision and roadmap of our Agile BI offering.

Next I met with Steve Lohr who is a technology reporter for the New York Times. We talked about many topics including the enterprise software markets and how open source is affecting them. We also talked about Hadoop, of course.

Next was a co-meet-up of the New York Predictive Analytics and No-SQL groups where I presented decks about Weka and Hadoop, separately and together. There were lots of interesting questions and side discussions. By the time we finished all these topics a blizzard was going on outside. Cabs were nowhere to be seen, so Matt Gershoff of Conductrics was kind enough to lead me via the subway to the vicinity of my hotel.

Big Data in New York City

I’m having an interesting time in NYC this week. I had to retrieve my snowboarding jacket out of the attic for this trip. It’s snowing right now, which is better than the sleet forecast for later. So far I’ve met with a few Big Data customers and prospects and presented at the New York Hadoop User Group. Our hybrid database/Hadoop data lake architecture always gets a good reception and our ability to run our data integration engine within the Hadoop data nodes impresses people.

Being the first Business Intelligence vendor to bring reporting and ETL to the Hadoop space sets us apart from all the other vendors. We have so much recognition in this space that I’ve spoken to a few people in the last month who thought we were ‘THE’ visualization and data transformation provider for Hadoop and didn’t connect to other data sources.

This afternoon I’m meeting with reporters and columnists from a couple of different publications to chat about Big Data / Hadoop stuff. Tonight I’m presenting at the New York Predictive Analytics Meetup to talk about Hadoop from an analysis perspective.

Meetups and Pentaho Summit(s) coming up in January

It’s going to be a busy month.

January 19th and 20th is our Global 2011 Summit in San Francisco. I have three sessions: Pentaho for Hadoop, Extending Pentaho’s Capabilities, and an Architecture Overview. So I’m creating and digging up some new sample plug-ins and extensions. I’m also going to take part in a Q&A session with the Pentaho architects, since Julian Hyde (Mondrian), Matt Casters (PDI/Kettle), and Thomas Morgner (Pentaho Reporting) will all be there. Who should attend?

CTOs, architects, product managers, business executives and partner-facing staff from System Integrators and Resellers, as well as Software Providers with a need to embed business intelligence or data integration software into your products.

We usually have customers and prospects attending our summits as well.

We are also having an architect’s summit that same week to work on our 2011 technology road-map. That should be a lot of fun.

The week after that I’ll be in New York presenting at the NYC Hadoop User Group on Tuesday, January 25 and the NYC Predictive Analytics Meetup on Wednesday January 26th.

150,000 installations year-to-date for Pentaho

Our most recent figures show 156,000 copies of Pentaho software were installed so far this year. These numbers are not download numbers, but installed software that has been used. This includes Pentaho servers and some Pentaho client tools. These numbers do not represent only long-term installations, but also do not represent all Pentaho’s software distributions or installations. Since these numbers are not absolutel

An analysis by country of these numbers shows interesting results.

The Long Tail

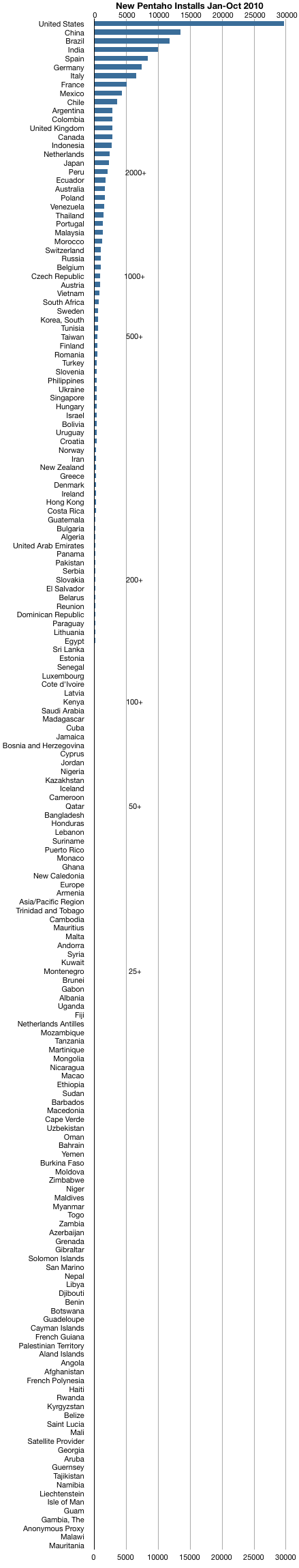

This chart shows the number of new installations year-to-date for each country. Our data shows new Pentaho installations in 176 countries so far this year. That’s out of a total of 229 countries.

This is clearly a classic long tail. In fact after the first 20 or 30 countries it is difficult to read values from the chart. This second chart uses a log scale. The line on this chart is almost perfectly linear, showing that the distribution by country is pretty much logarithmic.

Over the same time period Pentaho has customers in 46 countries. This is a larger geographic spread than most of the proprietary BI companies.

Since we are dealing with country-based data, here is the analysis I did using Google Geo Map, Pentaho Data Integration, and Pentaho BI Server.

New Pentaho Installs Jan-Oct 2010

This shows the geographic spread of the installations.

It is fairly obvious from the map above that the highest number of installations were in the USA, China, and Brazil, followed by India and parts of Europe. But this simplistic graphic does not take into account the economics or demographics of the countries. How does the number of installations relate to the size or economic power of each country?

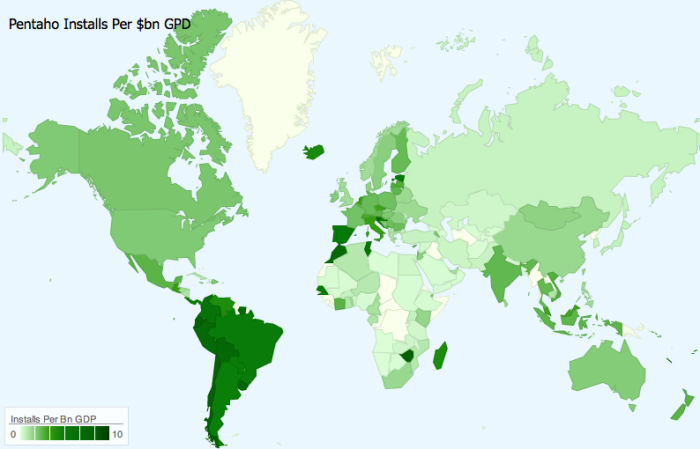

New Installations Per $Billion GDP

If we look at the number of new installations of Pentaho software per billion dollars of GDP we see a different picture. The GDP data is from the CIA World Factbook.

I capped ‘Installs Per Bn GDP’ at 10 to prevent outliers from skewing the color gradient.

Compared with the first map, the prominence of the USA and China is reduced, and the areas of high activity are shown to be South America followed by Europe and parts of Asia. But analysis using GDP alone does not take into account things like exchange rates and the cost-of-living within a country – as a result there is probably a bias towards countries like the South American ones. So I went to find metrics that should remove the bias of economic factors.

New Installations Per 100k Labor Force

If we look at the number of new Pentaho installs compared with the labor force of each country we get a slightly different picture. The labor force data is from the CIA World Factbook.

I capped ‘Installs Per 100k Labor Force’ at 50 to prevent outliers from skewing the color gradient.

Compared with the first two maps, this one shows the South American, European, and North American countries roughly equal to each other. Australia and New Zealand are also comparable. Asia, Africa, and the Middle East are shown to be generally behind. What is odd about this graphic is that countries like India, generally considered to be significant open source consumers, are not shown to be within the leading countries. This is, I’m assuming, because a large percentage of the labor force is agricultural and, as such, less likely to be doing much BI.

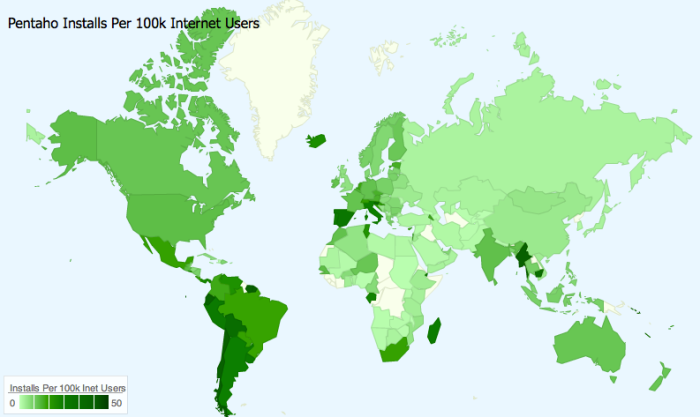

New Installations Per 100k Internet Users

So instead of labor force, let’s look at new installations of Pentaho for every 100k internet users within a country.

I capped ‘Installs Per 100k Internet Users’ at 50 to prevent outliers from skewing the color gradient.

Here we see that South America is still prominent, along with southern Europe. The rest of Europe and North America come second along with India, other parts of Asia and Australia. South Africa also makes a showing for the first time. China however does not show strongly.

This metric – Installations per 100k Internet Users – seems like a reasonable way to compare the adoption of software between countries. ‘Internet Users’, by definition, have access to a computer (needed to run FOSS) and to the internet (needed to get FOSS). This metric is not skewed by the percentage of the population that are not internet users, and is not skewed by cost-of-living or exchange rates.
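As a rough illustration of how a capped, normalized metric like this can be computed, here is a minimal sketch. The function name and the sample figures are hypothetical (they are not the data behind the maps); only the cap of 50 comes from the text above.

```python
def installs_per_100k(installs: int, internet_users: int, cap: float = 50.0) -> float:
    """Installations per 100,000 internet users, capped so outliers do not skew a color scale."""
    if internet_users <= 0:
        raise ValueError("internet_users must be positive")
    rate = installs / internet_users * 100_000
    return min(rate, cap)


# Hypothetical figures, for illustration only (NOT the data behind the maps)
sample = {
    "Country A": (12_000, 240_000_000),  # many installs, very many internet users
    "Country B": (3_000, 4_000_000),     # fewer installs, but far fewer users
}

for country, (installs, users) in sample.items():
    print(country, round(installs_per_100k(installs, users), 2))
```

In this made-up sample the smaller country has the higher rate and hits the cap, which is the kind of outlier the cap is meant to keep from dominating the color gradient.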

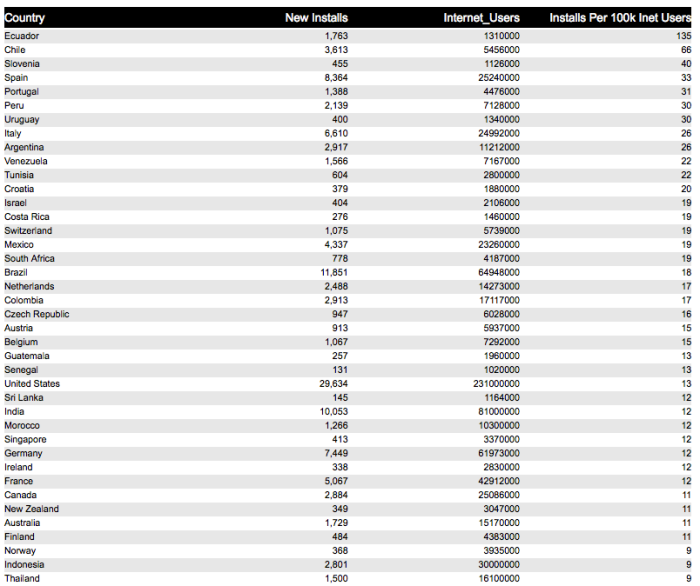

Here are the top 40 countries for new Pentaho installations per 100k internet users (for countries with over 1 million internet users).

There is a bias still. Countries with a lower percentage of internet users in the total population will be rated higher than those with very high percentage. This is because in the first case, the individuals with internet access will tend to be those in business, i.e. those with a higher than normal need for BI tools. Whereas in the second case the internet users include relatively more families and individuals – those with a lower need for BI tools. This bias would not affect the installation figures of software such as Firefox, but would affect the ratings in Pentaho’s case.

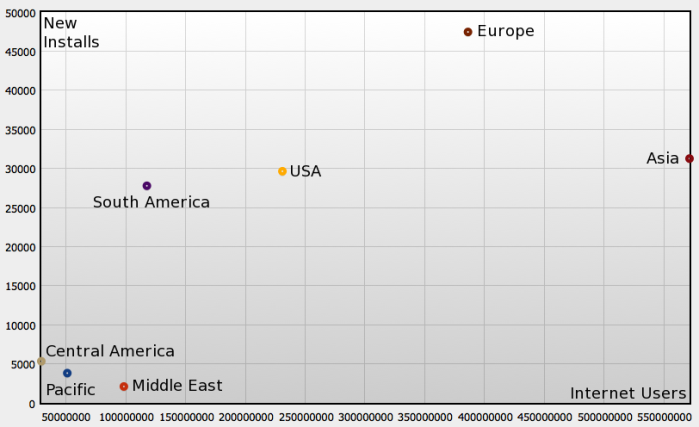

If we group the countries into regions we see some other interesting things. This scatter chart shows the number of internet users on the X axis and new installations of Pentaho software on the Y axis.

Interestingly the USA, South America, and Asia come out with around the same total number of installations (approx 30,000), but the chart shows a large difference (100m up to 550m) in the number of internet users within those regions. Europe, as a region, has the highest number of new installations, with a 50% margin over the second place region.

So which metric do you think is most valuable? And for what purpose?

Also interesting to note is that the 2010 installations numbers represent, for each country, 40-50% of the all-time (2007-2010) installation figures. This means that the number of new installations so far in 2010 is about the same as the number of installations in the previous 3 years combined.
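To see how a share of the all-time total translates into a comparison with the earlier years, here is a small sketch. The function name is mine; the 40% and 50% inputs are the two ends of the range quoted above.

```python
def ratio_to_prior_years(share_of_all_time: float) -> float:
    """Given this year's share of all-time installs, return this year's installs
    divided by the installs from all earlier years combined."""
    if not 0 < share_of_all_time < 1:
        raise ValueError("share must be strictly between 0 and 1")
    return share_of_all_time / (1 - share_of_all_time)


for share in (0.40, 0.50):
    ratio = ratio_to_prior_years(share)
    print(f"{share:.0%} of all-time -> {ratio:.2f}x the earlier years combined")
```

At 50% the current year exactly matches the earlier years combined; at 40% it is about two-thirds of them.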

About This Analysis

And yes, I used Pentaho software to do this analysis – I used Pentaho’s Agile BI process.

- Iteration 1: I first loaded the ‘new installations’ data into a table to do the histograms and the first map. After seeing the map, it occurred to me that just looking at the installation figures was not very interesting, and that comparing installations to GDP might be better.

- Iteration 2: So I went to find GDP data and added it to the table using Pentaho Data Integration. After seeing the ‘Pentaho Installs Per $bn GDP’ map it occurred to me that other metrics might show different, and better, results – so I went to find other data sets, not knowing what I might find.

- Iteration 3: At the CIA World Factbook I found Labor Force and Internet Users. I added these to the table and looked at the maps. At this point I decided that comparing installation counts with the total number of internet users in a country was a good metric.

It took three iterations of finding data, merge/calculate/load, and visualization before I settled on an analysis I thought was optimal. The important point here is that until I saw the data visualized, the next question did not occur to me, so a one-pass ‘requirements’ -> ‘design’ -> ‘implement’ -> ‘visualize’ process would not have worked.

Comparing open source and proprietary software markets

An excellent post on decisionstats.com in response to Jason Stamper’s CBR interview with SAS CEO Jim Goodnight highlights some issues that arise when you try to compare open source, commercial open source, and proprietary software in the marketplace.

The issues arise because the economics of the offerings are so different that traditional measures don’t work as well as they used to. As pointed out on decisionstats the commercial open source offerings (as yet) don’t amount to much when you compare revenue $ on paper.

There are several problems when attempting an analysis like this.

1. Stale Metrics

A few years ago the analysts of the operating systems market were confused. Everywhere they went they saw Linux machines in the server room. But every time they surveyed the market their results showed that Linux had a 2% market share. Their statistical data did not match the real world they witnessed. Why? They were asking the wrong question.

I’ll illustrate this problem using another domain. Let’s say we compare the actual usage of automobiles (vs unit sales) by looking at the total amount of gasoline bought by their owners. Up until a while ago the differences in fuel economy between different vehicles were small enough to be safely ignored. But if you use that metric today you’ll come to the conclusion that the hybrid cars are not driven very far each year, and the electric cars are not driven at all. Clearly ‘gasoline spend’ is the wrong metric today, but would have been ok in the past.
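Here is a small sketch of how the gasoline metric goes wrong. All of the numbers are invented for illustration: three hypothetical vehicles, each driven the same distance, with usage then inferred from fuel purchases using a single assumed fuel economy.

```python
ACTUAL_MILES = 12_000   # hypothetical: every vehicle is driven the same distance
ASSUMED_MPG = 30        # hypothetical: the analyst assumes one fuel economy for all

# Hypothetical fuel economy per vehicle type; None means it buys no gasoline
vehicles = {"conventional": 30, "hybrid": 50, "electric": None}


def inferred_miles(actual_miles: float, real_mpg, assumed_mpg: float) -> float:
    """Miles an analyst would infer from gasoline purchases alone."""
    gallons_bought = 0.0 if real_mpg is None else actual_miles / real_mpg
    return gallons_bought * assumed_mpg


for name, mpg in vehicles.items():
    print(f"{name}: actual {ACTUAL_MILES}, inferred {inferred_miles(ACTUAL_MILES, mpg, ASSUMED_MPG):.0f}")
```

The conventional car comes out right, the hybrid appears to be driven much less than it is, and the electric car appears not to be driven at all.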

Let’s switch back to software. After the Red Hat purchase of JBoss I received a survey from a well known analyst company. They were trying to gauge market reaction to the purchase. Here are two sample questions:

- How much did you spend on JBoss licenses last year?

- How much do you expect to spend on JBoss licenses next year?

My truthful answer to these questions would have to be $0. JBoss does not have a license fee. It is purchased on a subscription basis. There were other questions about spending on JBoss vs WebSphere and WebLogic. Even if I answer based on subscription dollars, $1 of JBoss buys me much more than $1 of WebSphere, so dollar-for-dollar comparisons aren’t valid.

For most commercial open source companies the subscription is around the same as the annual maintenance for the equivalent proprietary software – with no up-front license fee. Let’s say the proprietary software is $100k with 20% maintenance. So the first year revenue is $120k, and $20k per year after that. The (approximately) equivalent commercial open source software will have $0 license fee and an annual subscription of $20k or less per year. Over three years the proprietary software would cost $160k and the commercial open source software would be $60k – roughly 3 times cheaper. Independent assessments show the savings to be greater than that but I’ll stick to 3x for now.
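The three-year comparison can be worked through in a few lines. This is a sketch with names of my own choosing; the inputs are the example figures from the paragraph above.

```python
def three_year_costs(license_fee: float, maintenance_rate: float,
                     subscription: float, years: int = 3):
    """Return (proprietary_cost, open_source_cost) over the given number of years."""
    # Proprietary: up-front license plus annual maintenance as a share of the license
    proprietary = license_fee + license_fee * maintenance_rate * years
    # Commercial open source: no license fee, just the annual subscription
    open_source = subscription * years
    return proprietary, open_source


proprietary, open_source = three_year_costs(100_000, 0.20, 20_000)
print(f"Proprietary: ${proprietary:,.0f}")
print(f"Commercial open source: ${open_source:,.0f}")
print(f"Ratio: {proprietary / open_source:.2f}x")
```

The exact ratio is a little under 3, which is why the text says "roughly".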

Any company using pure open source or a community edition will report even lower figures when it comes to software spend.

The analysts in the operating systems market now ask many more questions about how many CPUs are deployed, instead of just questions about money. Some even ask about Linux variants like Fedora. They ask these questions because they have learned that they don’t have a clear picture of the market without them.

Each analyst decides what questions they want to ask. In the BI space, to date, most of the questions are about spending, not usage.

Hidden Utilization

Just after Sun acquired MySQL, Jonathan Schwartz (Sun CEO) took Marten Mickos (MySQL CEO) with him on a visit to the CIO of a large financial services company. The CIO was pleased to meet Mickos but didn’t know why he was there – ‘we don’t use MySQL’ he said. Mickos informed the CIO that there had been over 1000 downloads of MySQL by employees in his company. At this point the IT director informed his CIO that they used MySQL all over the place. Ask a CIO how much open source software is used in his company and you will often get an underestimate – sometimes wrong by many orders of magnitude.

Factoring in Community Edition

There is another problem with questions asked about software expenditure – it assumes that the open source software has no value outside of a subscription.

Many commentators on software markets won’t include data about companies who use software but do not have a support agreement. I assume the logic is that if you don’t have a support agreement, you’re not really serious about it. Maybe it’s just because it’s a lot easier to analyze this way. But at some point it becomes like analyzing the travel market and saying that if you don’t use a travel agent you’re not really serious about traveling, and therefore don’t count. At some point the analysis no longer reflects reality.

The most popular open source software such as Firefox, OpenOffice, MySQL, Linux etc are used by thousands or millions of people. Look at the effect of the Apache HTTP server on that market. How can you assume that this usage does not affect the market share across that market segment?

So usage of open source BI is hard to ignore, but also hard to quantify. Maybe we factor it in under a new metric.

Intangible Market

There is a portion of any given software market that is intangible because open source software is being used. This intangible market is not monetized by the commercial open source company – it is not revenue or bookings. It is value (or savings if you prefer) that the market derives from the open source software.

Here is one way to estimate the size of the ‘Intangible Market’ of a commercial open source company that makes $10m in revenue:

- These companies deliver functionality about 3-5 times cheaper than the proprietary software vendors – let’s use 3

- These companies get about 70% of their revenue from subscriptions – a factor of 0.7

- The ratio of community installations to customer installations is between 5000:1 and 100:1 – let’s use 100:1

- The same number of installations, under a proprietary model, would generate $10m x 3 x 0.7 x 100 = $2.1 bn

So is the ‘intangible market’ of a $10m commercial open source company really over $2bn? This seems a little extreme, but then so does assuming that usage of community edition software has zero impact. The $2.1bn is clearly strongly affected by assuming that every installation of the community edition has the same economic weight as a paying customer. So let’s mitigate. Many of these installations are:

- At deployments where there is no software budget for BI tools.

- In geographies where none of the proprietary vendors offer sales or support.

- In economies where exchange rates make the proprietary offerings non-viable

These installations represent a new market that has not been included before. But assuming a dollar for dollar exchange with the ‘old’ market is not reasonable. On the other hand many of these installations are:

- At deployments of SMB and enterprise companies with BI budgets.

- In North America, Europe and other regions where proprietary vendors offer sales or support.

- In economies where the proprietary offerings are frequently purchased.

So how do we balance these? I’m going to go with a factor of 0.1. Which says an installation of open source BI software (with no support contract) is effectively worth 10c where a supported commercial open source package or proprietary package would be worth $1.

So a commercial open source company with $10m in annual revenue has an intangible market of $10m x 3 x 0.7 x 100 / 10 = $210m. Overall these factors come out to 21 x annual revenue.

So there you have it. My initial estimate of a generic FOSS Intangible Market Factor (FIMF) is 21. To be accurate you’d need to estimate the FIMF for each open source offering within a given market.
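The estimate above can be written as a small function so that each factor is easy to vary. This is only a sketch: the function and parameter names are mine, and the default values are the rough guesses used in this post, not measured figures.

```python
def intangible_market(revenue: float,
                      price_advantage: float = 3.0,          # 3-5x cheaper; the post uses 3
                      subscription_share: float = 0.7,       # ~70% of revenue from subscriptions
                      community_per_customer: float = 100.0, # community installs per customer install
                      community_weight: float = 0.1) -> float:  # 10c on the dollar
    """Estimate the 'intangible market' for a commercial open source company."""
    return (revenue * price_advantage * subscription_share
            * community_per_customer * community_weight)


revenue = 10_000_000

unweighted = intangible_market(revenue, community_weight=1.0)
weighted = intangible_market(revenue)

print(f"Unweighted: ${unweighted / 1e9:.1f}bn")
print(f"Weighted:   ${weighted / 1e6:.0f}m")
print(f"FIMF:       {weighted / revenue:.0f}")
```

Changing any one default, for example using 5 for the price advantage or 0.05 for the community weight, shows how sensitive the factor is to each assumption.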

Then again, I’m a code jockey, not an analyst. Maybe they can estimate each of these factors more accurately.